AI text-to-speech (TTS) voices are now far more realistic than they used to be, thanks to advances in deep learning and natural language processing. By 2023, TTS systems had reached an average accuracy of over 90% in reproducing human speech, including factors such as pronunciation, intonation and emotional state. Still, the accuracy of AI-generated voices varies with model quality and training data.

TTS technology relies heavily on neural networks such as Tacotron 2 and WaveNet. These text-to-speech models predict phonemes, adjust prosody and synthesize audio waveforms. Industry terms such as “phoneme synthesis”, “pitch modulation” and “vocoder” hint at how much work goes into making these voices sound smooth. One study found that TTS models accounting for these factors produced speech that listeners judged to be about 85% similar to human voices in clarity, naturalness and expressiveness, better than earlier research had achieved.
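The three stages named above (phoneme prediction, prosody adjustment, waveform synthesis) can be pictured with a deliberately tiny sketch. This is not how Tacotron 2 or WaveNet work internally; those are learned neural models. Everything here is a hypothetical stand-in: the two-word lexicon, the per-phoneme pitch table and the sine-wave "vocoder" are assumptions chosen only to show how data flows from text to audio samples.

```python
import math

SAMPLE_RATE = 16_000  # samples per second (assumed for this toy)

# Hypothetical two-word lexicon; real systems learn or look up far larger mappings.
TOY_LEXICON = {"hi": ["HH", "AY"], "tom": ["T", "AA", "M"]}

# Hypothetical pitch per phoneme in Hz; 0.0 stands for an unvoiced sound.
TOY_PITCH_HZ = {"HH": 0.0, "AY": 220.0, "T": 0.0, "AA": 200.0, "M": 180.0}


def text_to_phonemes(text):
    """Stage 1: map words to phonemes (unknown words are silently dropped)."""
    phonemes = []
    for word in text.lower().split():
        phonemes.extend(TOY_LEXICON.get(word, []))
    return phonemes


def add_prosody(phonemes, duration_s=0.08, final_lengthening=1.5):
    """Stage 2: attach a pitch and a duration to each phoneme.

    The last phoneme is stretched, a crude imitation of phrase-final lengthening.
    """
    plan = []
    for i, phoneme in enumerate(phonemes):
        is_last = i == len(phonemes) - 1
        duration = duration_s * (final_lengthening if is_last else 1.0)
        plan.append((phoneme, TOY_PITCH_HZ[phoneme], duration))
    return plan


def vocode(plan):
    """Stage 3: render the plan as audio samples (sine tone or silence)."""
    samples = []
    for _, pitch_hz, duration in plan:
        n = int(round(duration * SAMPLE_RATE))
        for k in range(n):
            if pitch_hz > 0:
                samples.append(math.sin(2 * math.pi * pitch_hz * k / SAMPLE_RATE))
            else:
                samples.append(0.0)
    return samples


audio = vocode(add_prosody(text_to_phonemes("Hi Tom")))
print(len(audio) / SAMPLE_RATE, "seconds of audio")
```

The resulting tones sound nothing like speech, but the separation into stages mirrors why terms like prosody and vocoder come up: each stage can be improved or swapped independently.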

Data quality is one of the major factors influencing how accurate an AI voice can be. More versatile AI characters can be built from high-quality datasets with varied linguistic input covering different accents, dialects and tones. Models trained on insufficient or poorly distributed datasets, on the other hand, struggle with non-standard accents and idiomatic phrases. For instance, an AI voice trained primarily on American English may mispronounce regional slang or have difficulty with nuanced expressions in British English. In 2021, such models were found to have an error rate of about 15% when handling foreign languages and country-specific dialects, which lowered their overall accuracy and relevance outside the U.S.
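The text does not say how the 15% error rate was measured. One common way to put a number on such mistakes, assuming you have a transcript of what the synthesized audio actually sounded like (from human listeners or a speech recognizer), is word error rate: the word-level edit distance between the intended text and the transcript, divided by the number of intended words. The function name and example sentences below are illustrative assumptions.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] = edits needed to turn the first i-1 reference words
    # into the first j hypothesis words (classic dynamic programming).
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1      # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)


# Hypothetical example: one of seven words was rendered incorrectly.
intended = "the lorry is parked by the chemist"
heard = "the lorry is parked by the chemistry"
print(f"WER: {word_error_rate(intended, heard):.1%}")
```

Computing this separately per accent or dialect in a test set is one way to see whether a voice degrades outside the variety it was mostly trained on.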

Configuration features such as emotion control and style transfer also help with accuracy, because they let a voice adapt its tone to the context. When the content calls for a formal register, as in news or media, the voice is adjusted to match. This mattered in a 2022 project in which one of the biggest tech companies used AI voices for customer service and saw a 25% increase in positive customer feedback because the answers were more contextual.

Even today, though, reproducing human nuance remains one of the key challenges for AI TTS characters. Sarcasm, irony and other kinds of layered meaning are often beyond the scope of AI. As deep-learning pioneer Geoffrey Hinton has put it, AI is very good at imitating speech patterns, but understanding the deeper emotion and intent behind human language will remain a challenge for the future. Models can produce accurate, human-like speech, but nuances such as pausing for emphasis or the slight tonal changes that occur in day-to-day conversation are much harder to replicate consistently.

Another important factor is real-time performance. In gaming or interactive customer support, for example, a TTS system must be able to generate speech quickly. Existing models operate at low latency, usually generating speech within 200 ms, which is close to real time. This makes AI voices suitable for fast, interactive contexts, and their adaptability has led to adoption en masse.
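Whether a given setup stays inside the roughly 200 ms figure quoted above is easy to check empirically. The sketch below times repeated calls and compares the median against that budget. `fake_synthesize` is a hypothetical placeholder that only sleeps; in practice you would pass in whatever function calls your actual TTS engine.

```python
import time
from statistics import median

LATENCY_BUDGET_MS = 200.0  # the figure quoted in the text


def fake_synthesize(text: str) -> bytes:
    """Hypothetical stand-in for a real TTS call; it only simulates work."""
    time.sleep(0.001 * len(text.split()))
    return b"\x00" * 100


def measure_latency_ms(synthesize, text, runs=5):
    """Time several calls to synthesize(text); return latencies in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        synthesize(text)
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples


def within_budget(samples, budget_ms=LATENCY_BUDGET_MS):
    """True if the median latency is at or below the budget."""
    return median(samples) <= budget_ms


samples = measure_latency_ms(fake_synthesize, "How can I help you today?")
print(f"median: {median(samples):.1f} ms, within budget: {within_budget(samples)}")
```

The median is used here so that a single slow call does not dominate the result; for interactive use you may also want to look at the worst-case sample, since occasional long pauses are what users notice.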

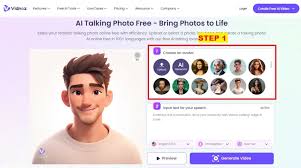

Platforms such as ai text to speech characters let people who want to delve deeper into the technology, or simply tinker with it, get an idea of how good (or bad) it has become. They let users change pitch, tone and even style as required, which makes them very convenient.

Overall, AI-generated text-to-speech characters are quite accurate under best-case conditions: clearly spoken language and contexts similar to the training data. Although they are far from perfect at replicating intricate human feelings and subtle verbal variation, AI-generated voices can now be indistinguishable from human speech to non-experts. As the technology develops further, these voices will become more lifelike, pushing the limits of how realistic digital communication can be.